Innovative Technology – GelSight Sensor

Eight years ago, Ted Adelson's research group at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) unveiled a sensor technology called GelSight, which uses physical contact with an object to produce a remarkably detailed 3-D map of its surface.

Now two MIT teams have mounted GelSight sensors on the grippers of robotic arms, giving the robots greater sensitivity and dexterity. The researchers presented their work in two papers at the International Conference on Robotics and Automation.

In one paper, Adelson's group used data from the GelSight sensor to enable a robot to judge the hardness of surfaces it touches, a crucial ability if household robots are to handle everyday objects. In the other, Russ Tedrake's Robot Locomotion Group at CSAIL used GelSight sensors to enable a robot to manipulate smaller objects than was previously possible.

The GelSight sensor is, in some ways, a low-tech solution to a difficult problem. It consists of a block of transparent rubber, the "gel" of its name, one face of which is coated with metallic paint. When the paint-coated face is pressed against an object, it conforms to the object's shape.

GelSight Sensor: Easy for Computer Vision Algorithms

The metallic paint makes the object's surface reflective, so its geometry becomes much easier for computer vision algorithms to infer. Mounted on the sensor opposite the paint-coated face of the rubber block are three coloured lights and a single camera.

Adelson, the John and Dorothy Wilson Professor of Vision Science in the Department of Brain and Cognitive Sciences, explained that the system shines coloured lights from different angles onto this reflective material, and by looking at the colours, the computer can work out the 3-D shape of whatever is being touched.
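The idea of recovering shape from several differently coloured lights can be illustrated with classical photometric stereo. The sketch below is not GelSight's actual reconstruction pipeline: it assumes an idealised Lambertian surface, one light per colour channel, and made-up light directions (`LIGHTS`). Under those assumptions each pixel's (R, G, B) values give three linear equations that determine the surface normal, from which the local slope follows.

```python
import math

# Hypothetical unit light directions for the red, green and blue lights.
# These are illustrative assumptions, not GelSight's real geometry.
LIGHTS = [
    (0.8, 0.0, 0.6),
    (-0.4, 0.69282032, 0.6),
    (-0.4, -0.69282032, 0.6),
]


def _det3(m):
    """Determinant of a 3x3 matrix given as nested sequences."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve the 3x3 linear system a @ x = b using Cramer's rule."""
    d = _det3(a)
    if abs(d) < 1e-12:
        raise ValueError("light directions must be linearly independent")
    x = []
    for col in range(3):
        m = [list(row) for row in a]
        for row in range(3):
            m[row][col] = b[row]  # replace one column with the intensities
        x.append(_det3(m) / d)
    return x


def normal_from_rgb(rgb, lights=LIGHTS):
    """Recover a unit surface normal and albedo from one pixel's (R, G, B).

    Assumes a Lambertian model: channel_i = albedo * dot(light_i, normal).
    """
    g = solve3(lights, rgb)  # g = albedo * normal
    albedo = math.sqrt(sum(c * c for c in g))
    normal = tuple(c / albedo for c in g)
    return normal, albedo


def gradient_from_normal(n):
    """Surface slopes (dz/dx, dz/dy) implied by a normal with n_z > 0."""
    nx, ny, nz = n
    return -nx / nz, -ny / nz
```

Rendering a pixel from a known normal and feeding its three channel values back into `normal_from_rgb` recovers that normal; repeating this over every pixel yields a slope field that can be integrated into a height map.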

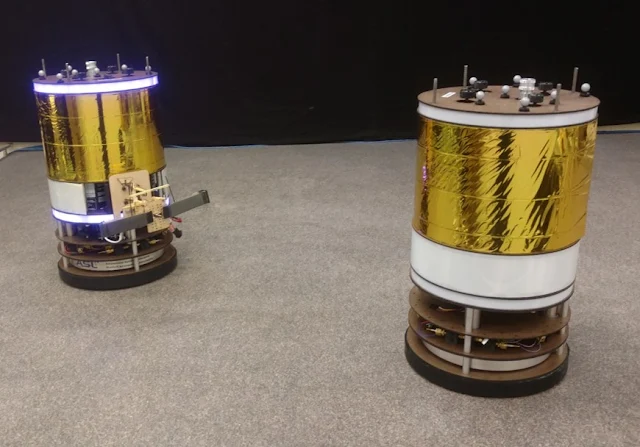

In both sets of experiments, a GelSight sensor was mounted on one side of a robotic gripper, a device somewhat like the head of a pair of pincers but with flat gripping surfaces rather than pointed tips.

For an autonomous robot, gauging an object's softness or hardness is essential not only in deciding where and how hard to grasp it but also in predicting how it will behave when moved, stacked, or laid on different surfaces. Physical sensing would also help robots distinguish between objects that look alike.

GelSight Sensor: Softer Objects Flatten More

In earlier work, robots attempted to assess an object's hardness by laying it on a flat surface and gently poking it to see how much it gives. But this is not how humans gauge hardness. Instead, our judgement depends on the degree to which the contact area between the object and our fingers changes as we press on it.

Softer objects flatten more, increasing the contact area. The MIT researchers used the same approach. Wenzhen Yuan, a graduate student in mechanical engineering and first author on the paper from Adelson's group, used confectionery moulds to create 400 groups of silicone objects, with 16 objects in each group.
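The contact-area cue can be shown with a toy calculation. This is not the method used in the paper; it is a hand-coded illustration that assumes each sensor frame has already been converted into a grid of gel indentation depths. The function names, the `threshold` value and the tiny example frames are all hypothetical. Contact area is counted as the number of pixels indented beyond the threshold, and a softer object shows a larger growth in that area between the first and last frames.

```python
def contact_area(depth_map, threshold=0.05):
    """Count pixels whose gel indentation exceeds `threshold` (arbitrary units)."""
    return sum(1 for row in depth_map for d in row if d > threshold)


def area_growth(frames, threshold=0.05):
    """Ratio of contact area in the last frame to contact area in the first."""
    first = contact_area(frames[0], threshold)
    last = contact_area(frames[-1], threshold)
    if first == 0:
        raise ValueError("no contact detected in the first frame")
    return last / first


# Hypothetical 3x3 indentation maps at the start and end of a press.
HARD_PRESS = [
    [[0, 0, 0], [0, 0.1, 0], [0, 0, 0]],
    [[0, 0, 0], [0, 0.3, 0], [0, 0, 0]],      # deeper, but no wider
]
SOFT_PRESS = [
    [[0, 0, 0], [0, 0.1, 0], [0, 0, 0]],
    [[0, 0.1, 0], [0.1, 0.2, 0.1], [0, 0.1, 0]],  # object flattens and spreads
]
```

Here `area_growth(SOFT_PRESS)` exceeds `area_growth(HARD_PRESS)`, matching the intuition that softer objects flatten more under the same press.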

Within each group, the objects had the same shape but different degrees of hardness, which Yuan measured using a standard industrial scale. She then pressed a GelSight sensor against each object and recorded how the contact pattern changed over time, producing a short movie for each object.

To standardise the data format and keep the size of the data manageable, she extracted five frames from each movie, evenly spaced in time, describing the deformation of the object being pressed.
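Picking a fixed number of evenly spaced frames is a simple way to turn movies of different lengths into inputs of the same size. The sketch below assumes the five frames span the whole movie, including the first and last frames; the article says only that they were evenly spaced, so that choice, along with the helper names, is a hypothetical illustration rather than the paper's code.

```python
def evenly_spaced_indices(num_frames, k=5):
    """Return k frame indices spread evenly from the first to the last frame.

    Assumes the first and last frames are always included.
    """
    if num_frames < k:
        raise ValueError("movie has fewer frames than requested")
    if k == 1:
        return [0]
    step = (num_frames - 1) / (k - 1)
    return [round(i * step) for i in range(k)]


def sample_frames(frames, k=5):
    """Select k evenly spaced frames from a list of frames."""
    return [frames[i] for i in evenly_spaced_indices(len(frames), k)]
```

For a 41-frame movie this selects frames 0, 10, 20, 30 and 40, so every object contributes exactly five frames regardless of how long its press lasted.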

Changes in Contact Pattern and Hardness

Finally, the data was fed to a neural network that automatically looked for correlations between changes in contact patterns and hardness measurements. The resulting system takes frames of video as inputs and produces hardness scores with high accuracy.

Yuan also conducted a series of informal experiments in which human subjects palpated fruits and vegetables and ranked them according to their hardness. In every instance, the GelSight-equipped robot arrived at the same rankings.

The paper from the Robot Locomotion Group grew out of the group's experience with the Defense Advanced Research Projects Agency's Robotics Challenge (DRC), in which academic and industry teams competed to develop control systems that would guide a humanoid robot through a series of tasks related to a hypothetical emergency.

An autonomous robot will usually use some kind of computer vision system to guide its manipulation of objects in its environment. Such systems can provide reliable information about an object's location until the robot picks the object up.

GelSight Sensor: Live-Updating, Accurate Estimation

If the object is small, much of it will be occluded by the robot's gripper, making location estimation quite difficult. Consequently, at exactly the point at which the robot needs to know the object's precise location, its estimate becomes unreliable.

This was the problem the MIT team faced during the DRC, when their robot had to pick up and turn on a power drill. Greg Izatt, a graduate student in electrical engineering and computer science and first author on the new paper, commented that the DRC video shows the team spending two or three minutes turning on the drill.

It would have been much better, he said, to have a live-updating, accurate estimate of where the drill was and where the robot's hands were relative to it. That is why the Robot Locomotion Group turned to GelSight. Izatt and his co-authors, Tedrake (the Toyota Professor of Electrical Engineering and Computer Science, Aeronautics and Astronautics, and Mechanical Engineering), Adelson, and Geronimo Mirano, another graduate student in Tedrake's group, designed control algorithms that use a computer vision system to guide the robot's gripper toward a tool and then hand location estimation over to the GelSight sensor once the robot has the tool in hand.