In future Internet research, a key topic is how to improve the existing architecture so that it can meet different types of requirements. Professor Xu Ke and his group from the Tsinghua National Laboratory for Information Science and Technology (TNList), Department of Computer Science and Technology, Tsinghua University, set out to find a solution to this problem.

The team has developed a novel evolvable Internet architecture framework under three design constraints: the evolvability constraint, the manageability constraint, and the economic adaptability constraint. According to them, the network layer can be used to develop the evolvable architecture under these design constraints. Their work has been published in SCIENCE CHINA Information Sciences, 2014, Vol. 57(11), under the title "Towards evolvable internet architecture-design constraints and models analysis".

The overall concept:

With nearly one third of the world's population having access to it, the Internet has become one of the strongest global means of communication. In a deeper sense, however, the Internet is no longer only a communication channel: it has been extended into a medium for communication, data processing, and storage. Given these changes, it is difficult for the existing Internet architecture to adapt.

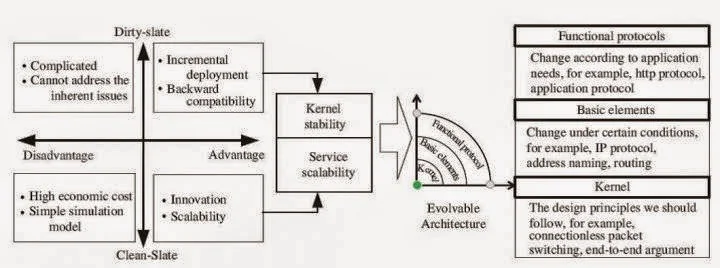

Research on the future Internet follows two mainstream ideas for architecture development, the dirty-slate approach and the clean-slate approach. Both have been pursued for years in many countries, but neither can yet fully and efficiently solve the problems of the current Internet architecture.

The dirty-slate approach can solve only a small range of local issues on the Internet, and it also makes the Internet architecture evolve into a complex and cumbersome structure. The clean-slate approach, in turn, faces serious deployment and transition issues, which become uncontrollable in the current architecture.

The evolvable Internet architecture combines the advantages of both the clean-slate approach and the dirty-slate approach, and it also ensures that the core principles are not compromised.

The evolvable architecture is more flexible than the dirty-slate approach and more stable than the clean-slate approach. There are three different types of layers in the evolvable architecture.

Apart from ensuring that the construction of the evolvable architecture conforms to the design principles, three constraints must be satisfied during the development stages: the economic adaptability constraint, the evolvability constraint, and the manageability constraint. Together, these shape the next generation of the Internet.

The development and history:

The collaborative efforts of many researchers from different institutes and universities are behind the development of this evolvable architecture. The project was supported by grants from the 973 Project of China (Grant Nos. 2009CB320501 & 2012CB315803), the NSFC Project (Grant Nos. 61170292 & 61161140454), the New Generation Broadband Wireless Mobile Communication Network of the National Science and Technology Major Projects (Grant No. 2012ZX03005001), the 863 Project of China (Grant No. 2013AA013302), EU MARIE CURIE ACTIONS EVANS (Grant No. PIRSES-GA-2010-269323), and the National Science and Technology Support Program (Grant No. 2011BAK08B05-02).